Sanjeevani

Murf AI, Gradio, Python, Llama, Groq

Tuesday, June 24, 2025

1. Project Vision

Sanjeevani was inspired by the need to bridge the global healthcare gap using AI. The vision was to create a universally accessible "digital first-aid" provider that could understand a patient's symptoms from voice, text, or images and provide instant guidance in their native language, making preliminary medical advice more approachable for everyone.

2. Technology Choices

The tech stack was chosen for a lean, real-time, and multimodal AI application:

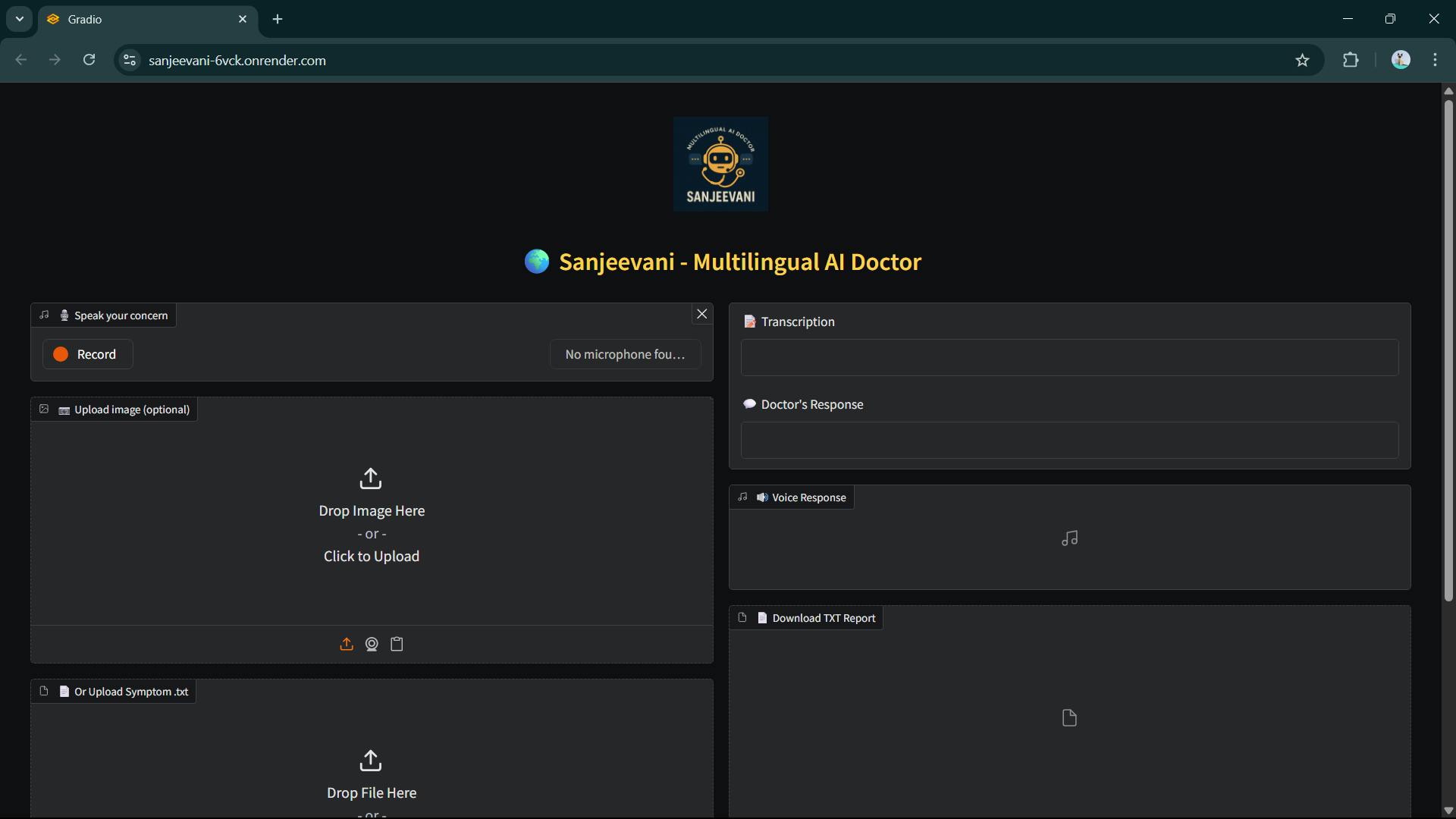

- UI Framework: Gradio was used to rapidly build a simple, interactive UI capable of handling audio, image, and file inputs.

- Speech-to-Text & LLM: Groq's API (Whisper and LLaMA) was selected for its low-latency inference, which is crucial for a real-time conversational experience.

- Translation & Text-to-Speech: Murf AI was used for its high-quality voice generation and translation, providing natural-sounding, localized audio responses.

- Audio & Language Handling: The PyDub and langdetect Python libraries were used to process audio files and automatically detect the user's language.

3. Challenges & Solutions

- Real-Time API Orchestration: To keep the chain of API calls predictable and avoid a long unexplained delay, the application logic runs the calls sequentially, and the Gradio UI shows loading states to keep the user informed while each step completes.

- Accurate Multilingual Flow: To ensure the correct language was used for the final audio response, a mapping layer was created to convert the language code from langdetect to the specific format required by the Murf AI API.

- Secure API Key Handling: API keys were kept out of the source code by using the python-dotenv library to load them securely from a local .env file at runtime.
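The language mapping and key handling described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the names `LANGDETECT_TO_LOCALE`, `to_tts_locale`, and `require_api_key` are hypothetical, and the locale strings are placeholders rather than verified Murf AI identifiers. It also assumes that python-dotenv's `load_dotenv()` has already been called at startup, so the keys from `.env` are present in the process environment.

```python
import os

# Hypothetical mapping from langdetect codes to TTS locale strings.
# langdetect returns codes such as "en", "hi", or "zh-cn"; the values
# below are illustrative placeholders, not verified Murf AI identifiers.
LANGDETECT_TO_LOCALE = {
    "en": "en-US",
    "hi": "hi-IN",
    "es": "es-ES",
    "fr": "fr-FR",
    "de": "de-DE",
    "zh-cn": "zh-CN",
}
DEFAULT_LOCALE = "en-US"  # assumed fallback for unmapped languages


def to_tts_locale(detected_code: str) -> str:
    """Return the TTS locale for a detected code, falling back to the default."""
    return LANGDETECT_TO_LOCALE.get(detected_code.strip().lower(), DEFAULT_LOCALE)


def require_api_key(name: str) -> str:
    """Read an API key from the environment and fail early if it is missing."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing environment variable: {name}")
    return value
```

Falling back to a default locale means an unmapped language still produces audio instead of an error, and failing early on a missing key surfaces configuration problems before any API call is made.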

4. Image Section

5. Final Architecture

The final architecture is a streamlined pipeline that flows from user input to AI-generated output:

- Input Layer: The Gradio UI captures user input in one of three forms: a microphone recording, a text file, or an image upload.

- Processing Core:

  - Voice/Text Input: Voice is transcribed to text using Groq Whisper. Language is detected from the text.

  - Image Input: The image is sent directly to the Groq multimodal LLM.

  - Symptom Analysis: The transcribed text or image is sent to the Groq LLaMA model with a prompt asking it to act as a doctor and analyze the symptoms.

- Output Layer:

  - The LLM's text response is sent to the Murf AI API.

  - Murf AI translates the text to the user's detected language and generates a high-quality audio file.

  - The final text response, the generated audio file, and a downloadable text report are all displayed back to the user in the Gradio UI.
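The flow above could be sketched as a single generator that reports progress after each step. This is only an illustration under stated assumptions: `Stages` and `run_pipeline` are hypothetical names, the real Groq and Murf AI clients are replaced by injected callables, and the fallback language for image input is an assumption, since the write-up does not say how language is chosen in that case.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, Optional, Tuple

Step = Tuple[str, Optional[str], Optional[str]]  # (status, reply, audio_path)


@dataclass
class Stages:
    """Injected callables standing in for the real API clients (hypothetical)."""
    transcribe: Callable[[str], str]        # audio path -> text
    detect_language: Callable[[str], str]   # text -> language code
    analyze_text: Callable[[str], str]      # symptom text -> LLM reply
    analyze_image: Callable[[str], str]     # image path -> LLM reply
    synthesize: Callable[[str, str], str]   # (reply, language) -> audio path


def run_pipeline(
    stages: Stages,
    audio: Optional[str] = None,
    text: Optional[str] = None,
    image: Optional[str] = None,
    default_language: str = "en",  # assumed fallback when no text is available
) -> Iterator[Step]:
    """Run the stages in order, yielding a status update before each one."""
    if image is not None:
        yield "Analyzing image...", None, None
        language = default_language
        reply = stages.analyze_image(image)
    else:
        if audio is not None:
            yield "Transcribing audio...", None, None
            text = stages.transcribe(audio)
        if not text:
            raise ValueError("Provide audio, text, or an image.")
        language = stages.detect_language(text)
        yield "Analyzing symptoms...", None, None
        reply = stages.analyze_text(text)

    yield "Generating audio...", reply, None
    audio_path = stages.synthesize(reply, language)
    yield "Done", reply, audio_path
```

Because Gradio accepts generator functions as event handlers, a function shaped like this could update a status field after every `yield`, which is one way to implement the loading states mentioned earlier. Injecting the stages as callables also lets the flow be exercised with stubs, without network access or API keys.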